![Unlocking the Mystery: How AI Technology Works [A Fascinating Story, Useful Information, and Eye-Opening Statistics]](https://thecrazythinkers.com/wp-content/uploads/2023/05/tamlier_unsplash_Unlocking-the-Mystery-3A-How-AI-Technology-Works--5BA-Fascinating-Story-2C-Useful-Information-2C-and-Eye-Opening-Statistics-5D_1683030395.webp)

1. What is Machine Learning?

Machine Learning (ML) is the foundation of most modern artificial intelligence technology. Rather than being explicitly programmed with the action to take for each specific input, a computer is given large amounts of data from which it learns patterns that it can then use to make predictions.

For instance, if you want a computer program to identify cats in pictures it hasn’t seen before, you would feed it hundreds or thousands of labeled photos containing cats until the program learns to detect feline features on its own.

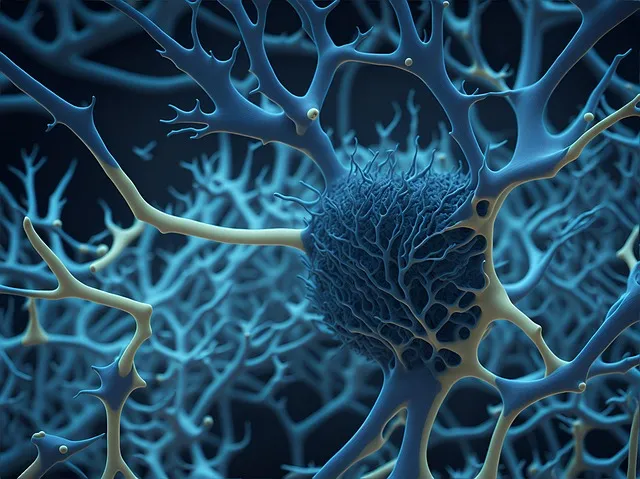

2. How Do Neural Networks Relate To Artificial Intelligence?

Artificial neural networks are computing systems loosely modeled after the biological neurons found in living organisms’ brains, hence the name. They are made up of layers of interconnected processing nodes arranged hierarchically (input layer -> hidden layers -> output layer), and each node computes its output from the weighted signals it receives from the nodes in the previous layer.
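To make the input -> hidden -> output idea concrete, here is a minimal sketch of a forward pass through a tiny fully connected network in plain Python. The layer sizes, the hand-picked weights, and the use of ReLU in the hidden layer are assumptions for illustration only; a real network learns its weights from data.

```python
from typing import Callable, List, Optional

Vector = List[float]
Matrix = List[List[float]]  # one row of incoming weights per node


def relu(x: float) -> float:
    """Rectified linear unit: keeps positive values, turns negatives into 0."""
    return max(0.0, x)


def dense(inputs: Vector, weights: Matrix, biases: Vector,
          activation: Optional[Callable[[float], float]] = None) -> Vector:
    """One fully connected layer: every node takes a weighted sum of all inputs."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs


def forward(x: Vector) -> Vector:
    # Hypothetical hand-picked weights: 2 inputs -> 3 hidden nodes -> 1 output.
    hidden = dense(x, [[0.5, -1.0], [1.0, 1.0], [-0.5, 0.25]], [0.0, -0.5, 0.1], relu)
    return dense(hidden, [[1.0, 2.0, -1.0]], [0.2])


print(forward([1.0, 2.0]))  # a single output value, approximately 5.1
```

Each node only “sees” the previous layer’s outputs, which is what gives the network its layered, hierarchical structure.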

Neural networks help machines make sense of data by analyzing many pieces of information together, much as humans link concepts to infer new knowledge, and by extracting increasingly abstract features at successive stages. This sets them apart from classic algorithms designed to produce fixed, binary responses.

Applications range widely. In smart cities, algorithms manage traffic flow during peak hours; companies increase productivity by predicting maintenance issues before they occur, based on real-time data analysis; and online retail giants like Amazon use recommendation engines that draw on purchase history and browsing behavior to suggest new products or services.
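The recommendation idea can be sketched with simple item-to-item co-occurrence counting: products that often appear together in customers’ histories are suggested to one another. This is an illustrative assumption, not a description of how Amazon’s production system works, and the purchase histories are made up.

```python
from collections import Counter, defaultdict
from itertools import combinations
from typing import Dict, List


def build_cooccurrence(histories: List[List[str]]) -> Dict[str, Counter]:
    """Count how often each pair of items appears in the same user's history."""
    co: Dict[str, Counter] = defaultdict(Counter)
    for items in histories:
        for a, b in combinations(sorted(set(items)), 2):
            co[a][b] += 1
            co[b][a] += 1
    return co


def recommend(user_items: List[str], co: Dict[str, Counter], n: int = 3) -> List[str]:
    """Score items the user hasn't seen by how often they co-occur with the user's items."""
    scores: Counter = Counter()
    for item in user_items:
        for other, count in co.get(item, Counter()).items():
            if other not in user_items:
                scores[other] += count
    return [item for item, _ in scores.most_common(n)]


# Hypothetical purchase histories, one list per customer.
histories = [
    ["laptop", "mouse", "laptop bag"],
    ["laptop", "mouse"],
    ["phone", "phone case"],
    ["laptop", "laptop bag", "monitor"],
]
co = build_cooccurrence(histories)
print(recommend(["laptop"], co))
```

A customer who bought a laptop would be shown the laptop bag and mouse first (each co-occurred twice), then the monitor; phone accessories never appear because they never co-occur with laptops.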

AI technologies still face a variety of limitations that affect their development. They can miss context when solving problems, because they do not understand crucial aspects such as societal norms or the ethical implications of decisions made without close human oversight. There is also the inherent issue of algorithmic bias: decision-making processes that rely solely on historical datasets can reproduce biases introduced by the way those datasets were collected.
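One simple way to surface this kind of bias is to compare how often a model makes a positive decision for each group, a check often called demographic parity. The sketch below uses hypothetical loan-approval outputs labeled by group; a large gap is a signal to investigate the training data, not proof of bias on its own.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def selection_rates(decisions: List[Tuple[str, int]]) -> Dict[str, float]:
    """Fraction of positive decisions (1) for each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, decision in decisions:
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(decisions: List[Tuple[str, int]]) -> float:
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(decisions).values()
    return max(rates) - min(rates)


# Hypothetical model outputs: (group, approved?)
decisions = [("A", 1), ("A", 1), ("A", 0), ("A", 1),
             ("B", 0), ("B", 1), ("B", 0), ("B", 0)]
print(selection_rates(decisions))  # {'A': 0.75, 'B': 0.25}
print(parity_gap(decisions))       # 0.5
```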

5. Conclusion

Artificial Intelligence technology is bringing positive changes across many industries, giving businesses insights and capabilities that were previously unavailable and benefiting people through improvements such as wider access to healthcare. As the technology advances into unknown territory, it raises ethical challenges that need continuous monitoring and iterative improvement, so that AI becomes a useful tool that serves society well and improves countless lives around the world.

1. Machine learning forms the backbone of AI

At its core, artificial intelligence relies on machine learning algorithms to make decisions based on patterns and data points. Machine learning involves feeding a program large amounts of data – either labeled or unlabeled – so that it can “learn” from these examples and generate predictions or decisions based on new input down the line. The goal here is essentially to create self-learning software that can continuously improve its performance with each iteration.
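The “unlabeled” case can be illustrated with k-means clustering, a classic unsupervised algorithm that groups points without being told what the groups are. This is a minimal 1-D sketch on made-up numbers; it naively uses the first k points as the initial centroids, which is an assumption (practical implementations use smarter initialization).

```python
from typing import List, Tuple


def kmeans_1d(points: List[float], k: int, iterations: int = 100) -> Tuple[List[float], List[int]]:
    """Cluster 1-D points into k groups by alternating assignment and update steps."""
    centroids = points[:k]  # naive initialization: the first k points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: abs(p - centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            new_centroids.append(sum(members) / len(members) if members else centroids[c])
        if new_centroids == centroids:  # converged: no centroid moved
            break
        centroids = new_centroids
    return centroids, labels


points = [1.0, 1.5, 2.0, 10.0, 10.5, 11.0]
print(kmeans_1d(points, k=2))  # ([1.5, 10.5], [0, 0, 0, 1, 1, 1])
```

No labels were supplied, yet the algorithm discovers the two natural groups in the data.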

2. Natural language processing enables communication

As anyone who’s ever used a voice assistant knows, natural language processing (NLP) plays an important role in making human-machine interaction effective. NLP refers to computational techniques for analyzing human language, such as text, speech, or dialogue, and extracting meaning from it by combining information retrieval techniques with computational linguistics. For example, chatbots rely heavily on NLP tools to interpret conversational cues like tone and context so they can respond with meaningful replies.
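As a heavily simplified sketch of the “extracting meaning” step, a chatbot can turn a message into a bag of words and pick the intent whose example phrase is most similar by cosine similarity. The intent names and phrases below are hypothetical, and real NLP systems use far richer models that capture word order, tone, and context.

```python
import math
import re
from collections import Counter
from typing import Dict


def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count each word token."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical intents, each described by one example phrase.
INTENTS: Dict[str, str] = {
    "check_order": "where is my order delivery status",
    "refund": "i want a refund for my purchase",
    "greeting": "hello hi good morning",
}


def classify(message: str) -> str:
    """Return the intent whose example phrase best matches the message."""
    words = bag_of_words(message)
    return max(INTENTS, key=lambda name: cosine(words, bag_of_words(INTENTS[name])))


print(classify("Hi there, where is my order?"))  # check_order
```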

3. Neural networks perform complex tasks

Another key component of artificial intelligence is neural networks, the basis of deep learning techniques, which are inspired by the networks of neurons found in biological brains. A neural network consists of layers that map inputs through hidden nodes (units) to outputs. It is trained on large datasets using backpropagation: the error at the output layer is measured, its gradients are propagated back through the preceding layers, and the weights are adjusted step by step until the desired results are achieved. This gives machines improved decision-making abilities in applications such as image recognition, chatbots, and authentication systems.
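The backpropagation loop described above can be sketched in plain Python on the classic XOR problem, which a network without a hidden layer cannot solve. The network size (2 inputs, 3 tanh hidden units, 1 sigmoid output), the learning rate, the cross-entropy loss, and the epoch count are illustrative assumptions; the point is the shape of the algorithm: forward pass, error at the output, gradients passed back to the hidden layer, weight update.

```python
import math
import random
from typing import List, Tuple

random.seed(0)  # fixed seed so the run is repeatable

# XOR truth table: the output is 1 only when exactly one input is 1.
DATA: List[Tuple[List[float], float]] = [
    ([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)
]
H, LR = 3, 0.5  # hidden units and learning rate (assumed values)

w1 = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(H)]  # input -> hidden
b1 = [0.0] * H
w2 = [random.uniform(-1, 1) for _ in range(H)]  # hidden -> output
b2 = 0.0


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(x: List[float]) -> Tuple[List[float], float]:
    """Forward pass: tanh hidden layer, sigmoid output."""
    h = [math.tanh(sum(w * xi for w, xi in zip(w1[j], x)) + b1[j]) for j in range(H)]
    y = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
    return h, y


def train_epoch() -> float:
    """One full-batch gradient descent step; returns the mean cross-entropy loss."""
    global b2
    gw1 = [[0.0, 0.0] for _ in range(H)]
    gb1, gw2 = [0.0] * H, [0.0] * H
    gb2 = loss = 0.0
    for x, target in DATA:
        h, y = forward(x)
        p = min(max(y, 1e-12), 1 - 1e-12)  # clamp to keep log() finite
        loss -= target * math.log(p) + (1 - target) * math.log(1 - p)
        d_out = y - target  # error signal at the output (sigmoid + cross-entropy)
        gb2 += d_out
        for j in range(H):
            gw2[j] += d_out * h[j]
            d_hidden = d_out * w2[j] * (1 - h[j] ** 2)  # propagate back through tanh
            gb1[j] += d_hidden
            for i in range(2):
                gw1[j][i] += d_hidden * x[i]
    n = len(DATA)
    for j in range(H):  # step every weight against its averaged gradient
        w2[j] -= LR * gw2[j] / n
        b1[j] -= LR * gb1[j] / n
        for i in range(2):
            w1[j][i] -= LR * gw1[j][i] / n
    b2 -= LR * gb2 / n
    return loss / n


losses = [train_epoch() for _ in range(5000)]
print(f"mean loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

The hidden-layer gradient reuses the output error (`d_out`) multiplied by the connecting weight and the tanh derivative, which is exactly the “feedback signal sent back through preceding layers” described in the text.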

4. Computer vision powers visual perception

Computer Vision combines digital imaging with machine learning algorithms to create systems that process, analyze, and understand digital image data. Tasks such as facial recognition or object detection typically rely on deep neural networks with many layers and thousands of nodes, which require careful design and tuning.
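A core operation in many vision networks is convolution: sliding a small kernel over the image and computing a weighted sum at each position. The sketch below applies a hand-written vertical-edge kernel to a tiny made-up grayscale image; in a convolutional neural network, the kernel values would be learned during training rather than chosen by hand.

```python
from typing import List

Image = List[List[float]]


def convolve2d(image: Image, kernel: Image) -> Image:
    """'Valid' 2-D convolution (no padding), computed as cross-correlation like most CNN libraries."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# 3x6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(3)]
# Vertical-edge kernel: responds where brightness increases from left to right.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]
print(convolve2d(image, kernel))  # [[0, 3, 3, 0]]
```

The output is zero over flat regions and large only where the window straddles the dark-to-bright boundary, which is how early layers pick out edges that later layers combine into shapes.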

One key thing to keep in mind about artificial intelligence technology is that it’s incredibly versatile: identifying cancer cells in radiological images, predicting a train’s arrival time from traffic flow patterns, and supporting financial institutions’ credit decisions based on customer profiles are just some examples. Virtually every industry can take advantage of this rapidly evolving technology, provided that large, high-quality datasets are available for training machine learning models that surface valuable trends and support sound decision-making.

Wrapping Up

2. Natural Language Processing: Another major component is natural language processing (NLP), the field concerned with how computer systems recognize and analyze human speech and text.

3. Deep Learning: A subset of machine learning that uses neural networks with many layers to help computers identify hidden connections among vast amounts of data points.

4. Computer Vision: This one is all about object recognition, teaching machines to differentiate between various items in digital images or video footage. This allows identification of anything from license plate numbers at a distance to people’s faces in security camera footage.

How do they work together?

All four areas complement each other to create end-to-end solutions.

For example, when you talk to Siri, it processes your utterance not only as individual words but also in light of the context behind them, using NLP, and outputs a predicted answer. That answer may draw on real-world examples Siri has “learned” through machine learning techniques.

A car manufacturer could combine computer vision with deep learning models to analyze videos and pictures taken on test drives, helping to reduce accidents by predicting problematic circumstances, such as someone turning blindly under certain conditions. If an accident did occur, similar historical video footage could help determine root causes and improve driving conditions in the future.

Two terms frequently used when it comes to Artificial Intelligence are Machine Learning (ML) and Deep Learning (DL). Deep learning is built on artificial neural networks (computing systems loosely modelled after the human brain), while machine learning also covers many other techniques, so the two differ quite significantly in practice.

In this blog post, we will explore how these two approaches work, to help you, as a business owner or manager, make an informed decision when choosing the best solution for your company.

What is Machine Learning?

Machine Learning is a subset of artificial intelligence. It involves training algorithms on large datasets so that the machine learns to recognize patterns in the inputs and produce accurate outputs. There are several types of ML, including supervised, unsupervised, semi-supervised, and reinforcement learning, each designed for specific use cases.

Supervised learning trains models on annotated examples labeled by human experts: someone goes through the data ahead of time and labels every sample. The algorithm learns from these labeled samples, which enables it to label new samples accurately by recognizing their similarity to examples that were previously given the same label.
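The “similarity to labeled examples” idea maps directly onto k-nearest neighbors, one of the simplest supervised algorithms: a new sample gets the majority label among the k most similar training examples. The two-number feature vectors and labels below are hypothetical stand-ins for features a real system would extract from images.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], str]


def knn_predict(train: List[Example], query: Sequence[float], k: int = 3) -> str:
    """Return the majority label among the k training examples closest to query."""
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical labeled examples: (features, label) annotated ahead of time.
train = [
    ((1.0, 1.2), "cat"), ((0.9, 1.0), "cat"), ((1.1, 0.8), "cat"),
    ((3.0, 3.2), "dog"), ((3.1, 2.9), "dog"), ((2.8, 3.0), "dog"),
]
print(knn_predict(train, (1.0, 0.9)))  # cat
print(knn_predict(train, (2.9, 3.1)))  # dog
```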

Training typically relies on an optimization process such as gradient descent. Over many epochs (passes through the training data), the algorithm iteratively adjusts a weight vector W for each layer of the network until it reaches convergence, the point at which further iterations no longer change the result significantly.
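Gradient descent and training “until convergence” can be shown on the simplest possible model: a line y = w·x + b fitted by minimizing mean squared error. The data, learning rate, and tolerance below are assumptions chosen for illustration; a neural network applies the same kind of update to every weight in every layer.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float], lr: float = 0.05,
             tol: float = 1e-10, max_epochs: int = 100_000) -> Tuple[float, float, int]:
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w = b = 0.0
    n = len(xs)
    prev_loss = float("inf")
    for epoch in range(1, max_epochs + 1):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        loss = sum(e * e for e in errors) / n
        if prev_loss - loss < tol:  # convergence: loss has stopped improving
            return w, b, epoch
        prev_loss = loss
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, max_epochs


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b, epochs = fit_line(xs, ys)
print(f"w={w:.3f}, b={b:.3f} after {epochs} epochs")
```

The learning rate matters: too small and convergence takes many epochs, too large and the updates overshoot and the loss diverges.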

What is Deep Learning?

Deep Learning refers specifically to neural networks made up of multiple layers arranged hierarchically, from the raw input data to the final predictions for some target variable(s). Each layer applies a different transformation to its input, producing representations at multiple levels of abstraction that can capture features which are difficult to identify with conventional machine learning approaches.

Deep Learning is loosely modeled on the structure of the human brain: neural networks learn from examples, adjusting their internal weights over many training iterations until they can make predictions with high accuracy.

A neural network consists of an input layer, followed by several hidden layers whose outputs pass through a nonlinear activation function (commonly ReLU), and finally an output layer. Deeper networks can improve metrics such as accuracy and training loss, but they require far more processing power and computational resources than traditional ML models, for two main reasons:

• Hardware configuration: deep learning needs substantial GPU processing power, storage, and memory.

• Datasets: deep learning algorithms need much larger datasets than traditional algorithms, because networks with many parameters need many examples to learn accurate patterns without overfitting.

Although deep learning technically falls under machine learning, it generally outperforms traditional approaches on large amounts of unstructured data, such as text for natural language processing or audio for speech recognition, because its multi-layered networks learn contextual features directly from the raw data and tend to keep improving as more data becomes available.

Machine Learning Vs. Deep Learning: Which One To Choose?

In summary, machine learning works well when labeled datasets are already available, helping machines recognize patterns in them and predict output values fairly accurately. Deep learning can achieve even better results on rich datasets, but it requires more powerful hardware and skilled experts who keep up with a constantly evolving field.

Artificial Intelligence (AI) is revolutionizing the way we live and work. It has transformed industries such as healthcare, finance, education, and transportation by providing better insights, prediction accuracy, personalized services, and automation.

Data Collection

Collecting quality training data can be challenging because of factors like privacy concerns, bias issues, incomplete records or poor-quality sources that need cleaning up before use.

However tedious this process may sound, it’s worth investing time and effort in obtaining clean, labeled datasets that cover the relevant edge cases, so that downstream processes don’t suffer from a lack of quality inputs later.

Data Preprocessing

Once we have collected our dataset(s), we must clean them and convert them into a ready-to-use format before feeding them into our ML models’ pipelines. As the saying goes: “Garbage in, garbage out.”

Models, like humans, can easily misinterpret messy data inputs, so proper preprocessing should include encoding non-numerical discrete values (converting categorical variables into numbers), threshold-based feature reduction, and rescaling methods suited to the use case, such as min-max scaling or normalization.

This will help optimize downstream processing times by reducing computational overheads associated with these operations at run-time.
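Two of the preprocessing steps named above, encoding categorical variables and min-max rescaling, can be sketched in a few lines of plain Python. The column values are hypothetical; libraries such as scikit-learn provide production-ready equivalents (`OneHotEncoder`, `MinMaxScaler`).

```python
from typing import List


def one_hot(values: List[str]) -> List[List[int]]:
    """Turn each category into a 0/1 indicator vector (one column per category)."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]


def min_max_scale(values: List[float]) -> List[float]:
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant column: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


print(one_hot(["red", "green", "red", "blue"]))
# [[0, 0, 1], [0, 1, 0], [0, 0, 1], [1, 0, 0]]  (columns: blue, green, red)
print(min_max_scale([10.0, 20.0, 15.0, 30.0]))
# [0.0, 0.5, 0.25, 1.0]
```

In practice, the categories and the minimum and maximum should be computed on the training split only and then reused for test data, so that no information leaks from the test set into training.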

Data Analytics

Analyzing the dataset and visualizing latent patterns or discrepancies helps determine which insights can be learned from the data. This is where we plot histograms and perform exploratory data analysis (EDA) at a prototype level.

EDA can help identify which features are correlated, how useful they may be for model training (i.e., whether they have strong predictive power), and whether outliers or anomalies are present. Outliers are usually detected with statistical techniques such as Z-score ranges, the interquartile range (IQR) rule, or Hampel’s test, and robust scaling can reduce their influence.
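Two of the outlier checks mentioned above, Z-scores and the IQR rule, can be sketched with Python’s built-in `statistics` module. The thresholds (|z| > 2 here, and the conventional 1.5 × IQR Tukey fences) and the sample data are assumptions. With small samples, a single extreme value inflates the standard deviation and caps how large a Z-score can get, which is one reason the IQR rule is often preferred.

```python
import statistics
from typing import List


def zscore_outliers(data: List[float], threshold: float = 2.0) -> List[float]:
    """Values lying more than `threshold` standard deviations from the mean."""
    mean, sd = statistics.mean(data), statistics.pstdev(data)
    return [x for x in data if sd and abs(x - mean) / sd > threshold]


def iqr_outliers(data: List[float], k: float = 1.5) -> List[float]:
    """Values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(data, n=4)
    iqr = q3 - q1
    return [x for x in data if x < q1 - k * iqr or x > q3 + k * iqr]


data = [10, 12, 11, 13, 12, 11, 10, 45]
print(zscore_outliers(data))  # [45]
print(iqr_outliers(data))     # [45]
```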

This step also involves feature engineering, which includes reducing redundancy by selecting only the candidate features that have the greatest impact on the dependent variable. This can improve accuracy while helping to avoid overfitting (a model fitting complexities in the training data more closely than necessary).
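A simple form of this feature selection ranks candidate features by the absolute Pearson correlation with the target and keeps the top ones. This is only one of many selection strategies and it only captures linear relationships; the feature names and values below are hypothetical.

```python
import math
from typing import Dict, List


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def select_features(features: Dict[str, List[float]], target: List[float], top_k: int) -> List[str]:
    """Keep the top_k features with the strongest (absolute) correlation to the target."""
    ranked = sorted(features, key=lambda name: abs(pearson(features[name], target)), reverse=True)
    return ranked[:top_k]


# Hypothetical dataset: predicting house price from three candidate features.
features = {
    "size_m2": [50, 70, 90, 110, 130],
    "rooms": [2, 3, 3, 4, 5],
    "house_number": [17, 3, 42, 8, 25],
}
price = [150, 205, 260, 320, 370]
print(select_features(features, price, top_k=2))  # ['size_m2', 'rooms']
```

The house number, which has no real relationship to price, is correctly dropped.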

Machine Learning & Modeling:

Once the data has been preprocessed and analyzed, the next stage is to apply machine learning models, after splitting the data into training and test subsets. The models then try to learn relationships between inputs and outputs within the training subset.
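The train/test split can be sketched as a shuffled partition of the dataset. The helper name, the 80/20 ratio, and the fixed seed are assumptions (common conventions, not requirements); scikit-learn’s `train_test_split` offers the same idea with more options.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_test_split(rows: Sequence[T], test_ratio: float = 0.2, seed: int = 42) -> Tuple[List[T], List[T]]:
    """Shuffle a copy of the rows and cut it into training and test subsets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # local RNG: reproducible, no global state
    n_test = round(len(shuffled) * test_ratio)
    return shuffled[n_test:], shuffled[:n_test]


rows = list(range(10))
train, test = train_test_split(rows)
print(len(train), len(test))  # 8 2
```

Shuffling before splitting matters when the data is ordered (for example by date or by class), since otherwise the test set may not resemble the training set.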

However, it’s important to avoid overfitting during this process (set your priors carefully!). Iterate across different hyperparameter settings (learning rate, convergence tolerance, tree depth, etc.) to find those best suited to your problem domain, making sure the model neither overfits nor underfits the target class(es).
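Hyperparameter tuning can be sketched as a grid search: train with each candidate setting, score it on a held-out validation set, and keep the best. Here the hyperparameter is k in a tiny 1-D k-nearest-neighbors regressor, a stand-in chosen for brevity; the same loop applies to learning rates or tree depths. A very small k copies individual training points (prone to overfitting noise), while a very large k averages away real structure (underfitting).

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y)


def knn_regress(train: List[Point], x: float, k: int) -> float:
    """Predict y at x as the mean y of the k nearest training points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def validation_mse(train: List[Point], val: List[Point], k: int) -> float:
    """Mean squared error of the k-NN regressor on the validation points."""
    return sum((knn_regress(train, x, k) - y) ** 2 for x, y in val) / len(val)


def grid_search(train: List[Point], val: List[Point], candidates: List[int]) -> Tuple[int, Dict[int, float]]:
    """Score every candidate k on the validation set and return the best one."""
    scores = {k: validation_mse(train, val, k) for k in candidates}
    return min(scores, key=scores.get), scores


train = [(float(x), 2.0 * x) for x in range(10)]     # y = 2x at x = 0..9
val = [(x + 0.5, 2.0 * x + 1.0) for x in range(9)]  # held-out points in between
best_k, scores = grid_search(train, val, candidates=[1, 2, 4, 8])
print(best_k, scores)
```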

Model Validation & Deployment:

Finally, evaluation metrics are used to measure the developed model’s performance on a validation set: accuracy, sensitivity (recall), precision, the Matthews correlation coefficient (MCC), ROC/AUC, the F-beta score, and so on. Cross-validation procedures, or test runs against independently chosen held-out samples, help confirm that these results hold beyond the training data.
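Most of the metrics listed above derive from the four cells of a binary confusion matrix. The sketch below computes several of them from hypothetical label lists; ROC/AUC is omitted because it needs predicted scores rather than hard labels, and scikit-learn’s `sklearn.metrics` module provides tested implementations of all of them.

```python
import math
from typing import Dict, List


def binary_metrics(y_true: List[int], y_pred: List[int], beta: float = 1.0) -> Dict[str, float]:
    """Confusion-matrix metrics for binary labels (1 = positive class)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))

    def safe_div(a: float, b: float) -> float:
        return a / b if b else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)  # also called sensitivity
    b2 = beta ** 2
    f_beta = safe_div((1 + b2) * precision * recall, b2 * precision + recall)
    mcc = safe_div(tp * tn - fp * fn,
                   math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)))
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f_beta": f_beta,
        "mcc": mcc,
    }


y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 0, 1, 0, 1]
print(binary_metrics(y_true, y_pred))
```

Setting `beta` above 1 weights recall more heavily than precision (useful when missing a positive is costly); below 1 does the opposite.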

In conclusion:

All the phases above highlight how strongly machine learning algorithms depend on data-driven techniques, particularly with the introduction of advanced deep learning techniques. Choosing, maintaining, and updating the right datasets is therefore essential.

Together, these phases help automate processes and free up human time while providing more precise decisions and actionable insights, with less uncertainty and smaller error margins.

Ultimately, this results in increased productivity and reduced costs while streamlining business functions as a whole: the mark of a successful adoption of state-of-the-art ML/AI technologies.

In the world of medicine, AI-powered robots are helping physicians perform complex surgeries with greater precision. For instance, in 2016, a team at Children’s National Health System in Washington, D.C., reported that its Smart Tissue Autonomous Robot (STAR) had autonomously sutured soft tissue (pig intestine), producing stitches that were more consistent than those of experienced surgeons performing the same procedure.

Besides the healthcare and finance sectors, the education industry isn’t far behind in adopting artificial intelligence. One fascinating use case is remote exam proctoring with online systems equipped with facial recognition technology. Well-known standardized tests such as the GMAT and GRE also use computer-adaptive testing algorithms, which adjust difficulty based on the test-taker’s performance, enabling more personalized and efficient assessments.

However, this transformation doesn’t mean everything will be automated going forward. Professionals in all fields must still do their jobs well, and implementing any new technology, whether off-the-shelf or novel, requires proper training combined with critical thinking and an understanding of how services and interactions fit together, so that iterations succeed over time and lead to better outcomes without alienating the human users involved.

To conclude: Artificial Intelligence continues to affect society positively, thanks largely to the unprecedented democratization made possible by API ecosystems, which bring the benefits of this technology within reach of businesses of all sizes, including small and medium ones. AI’s transformative effects will be felt across multiple industries and by a wide range of stakeholder groups, from end users to entrepreneurs, financiers, and social innovators; the question is not “if” but “when”, as new software increasingly addresses the complex problems confronting our world. Another noteworthy example is the use of algorithmic studies, extended over time, to trial CBD product formulations and optimize their efficacy for pain treatment. Ultimately, the result is greater efficiency and lower costs while maintaining high standards, with growth and wider accessibility built on proven implementation patterns and guided by professionals who constantly learn and adapt.

| Concept | Description |
|---|---|
| Machine Learning | AI learns from data by using algorithms and statistical models to make predictions or decisions. |
| Neural Networks | AI systems that attempt to simulate the behavior of the human brain by learning from massive amounts of data. |
| Natural Language Processing | AI technology that processes and understands human language, allowing for human-like interactions and language-based tasks such as chatbots and voice assistants. |
| Robotics | AI technology that can program machines to perform tasks independently, leading to the development of autonomous robots. |
| Computer Vision | AI technology that enables computers to interpret and understand visual information, including images and videos. |
| Expert Systems | AI systems that can solve complex problems by using expert human knowledge to make decisions and provide solutions. |


Historical fact:

The concept of artificial intelligence and its development can be traced back to the mid-20th century, when computer scientist John McCarthy coined the term “artificial intelligence” in the 1955 proposal for the Dartmouth workshop held in 1956.
