![Unmasking the Truth: How AI Deepfake Technology is Revolutionizing the World [And What You Need to Know]](https://thecrazythinkers.com/wp-content/uploads/2023/04/tamlier_unsplash_Unmasking-the-Truth-3A-How-AI-Deepfake-Technology-is-Revolutionizing-the-World--5BAnd-What-You-Need-to-Know-5D_1681644428.webp)

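Short answer: AI deepfake technology uses artificial intelligence to create manipulated images, video, or audio that appear real but are fake, most often by replacing one person's face or voice with another's.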

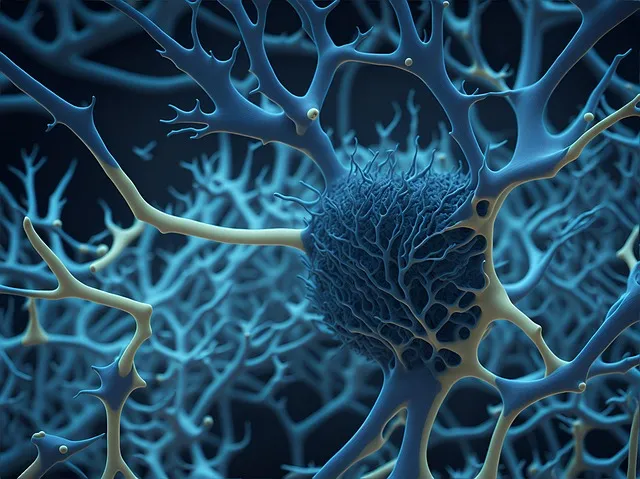

The world of technology is evolving at a remarkable pace, and one of the most recent advancements in this field is AI-generated deepfakes. Deepfakes are videos or images in which neural networks replace one person's face with another's, so that the manipulated result appears legitimate.

Creating a deepfake involves three main stages: gathering data, training a model, and generating output. Here's a step-by-step overview of how you can create your own professional-looking deepfake:

Step 1: Data Gathering

The quality of the final output depends heavily on the quality of the input data, so collecting good source material (photos and video of both faces) from various online resources is crucial. For face replacement specifically, the dataset should show each face precisely and from many angles, with enough detail to capture expressions and movement.
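A practical part of data gathering is discarding blurry frames before training. One widely used sharpness heuristic is the variance of the Laplacian: sharp images have strong edges and therefore a high-variance Laplacian response, while blurry ones do not. The sketch below is a minimal pure-Python version that assumes each frame is a grayscale image stored as a list of equal-length rows; the function names and the threshold are hypothetical, and real pipelines would normally use an image library (for example OpenCV's `cv2.Laplacian(...).var()` idiom) and tune the threshold per dataset.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over the interior pixels of a
    grayscale frame given as a list of equal-length rows (needs >= 3x3)."""
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Discrete Laplacian: sum of the 4 neighbours minus 4 x centre pixel.
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def keep_sharp(frames, threshold):
    """Keep only frames whose Laplacian variance reaches the (hypothetical,
    dataset-specific) sharpness threshold."""
    return [frame for frame in frames if laplacian_variance(frame) >= threshold]
```

A completely flat frame scores 0, while a frame full of hard edges scores very high, so low-scoring frames are the first candidates to drop.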

Step 2: Training Algorithms

Once you've gathered enough relevant material, ideally headshots from many different perspectives, it's time to train the machine learning model that swaps one face for another. A common approach uses autoencoders, which need many thousands of training iterations: the neural network first learns very basic, low-level image features such as edges and gradients, then learns how they combine into increasingly complex structures. In plain English, you'll need specialized knowledge plus access to substantial computing power to process large amounts of training data quickly.
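Autoencoder-based face-swap tools are often described as using one shared encoder and a separate decoder for each person. Both decoders learn to rebuild faces from the same latent representation, so a face of person A can later be decoded by person B's decoder. The toy sketch below shows that training loop with *linear* layers on 4-number vectors standing in for images. Real systems use deep convolutional networks, GPUs, and far more data, and every name, dimension, and hyperparameter here is an illustrative assumption.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def sgd_step(enc, dec, x, lr):
    """One gradient-descent step on the squared error ||dec(enc(x)) - x||^2."""
    z = matvec(enc, x)                      # latent code
    y = matvec(dec, z)                      # reconstruction
    r = [yi - xi for yi, xi in zip(y, x)]   # residual
    # Gradient w.r.t. the latent code, computed before dec is modified.
    grad_z = [2 * sum(dec[i][j] * r[i] for i in range(len(r)))
              for j in range(len(z))]
    for i in range(len(dec)):               # decoder: dL/dD = 2 r z^T
        for j in range(len(z)):
            dec[i][j] -= lr * 2 * r[i] * z[j]
    for j in range(len(enc)):               # encoder: dL/dE = grad_z x^T
        for k in range(len(x)):
            enc[j][k] -= lr * grad_z[j] * x[k]


def train(faces_a, faces_b, dim, latent, steps=300, lr=0.05, seed=0):
    """Train one shared encoder plus one decoder per identity."""
    rng = random.Random(seed)
    enc = rand_matrix(latent, dim, rng)     # shared by both identities
    dec_a = rand_matrix(dim, latent, rng)   # rebuilds faces of person A
    dec_b = rand_matrix(dim, latent, rng)   # rebuilds faces of person B
    for _ in range(steps):
        for x in faces_a:
            sgd_step(enc, dec_a, x, lr)
        for x in faces_b:
            sgd_step(enc, dec_b, x, lr)
    return enc, dec_a, dec_b


def total_loss(enc, dec, faces):
    """Summed squared reconstruction error over a list of face vectors."""
    return sum(sum((yi - xi) ** 2 for yi, xi in zip(matvec(dec, matvec(enc, x)), x))
               for x in faces)


def swap_face(enc, dec_target, face):
    """The swap: encode a face of one person, decode with the other's decoder."""
    return matvec(dec_target, matvec(enc, face))
```

Because the encoder is shared, it is pushed toward features common to both people (pose, expression), while each decoder supplies identity-specific appearance. That split is what makes the swap in `swap_face` possible.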

Step 3: Generating Output

Once the model has learned the associations between the real faces, it can generate frames in which one identity is transferred onto the other. This stage has to cope computationally with imperfect video frames and match attributes of the original recording, such as blink rate, mouth movement, and lip synchronization, so that the result looks as faithful as possible. Generative adversarial networks (GANs), in which a generator and a discriminator are trained against each other, can further improve the realism of the substituted faces, and transfer learning lets a model trained on one dataset be adapted to similar datasets so that specific facial shapes can be superimposed on different people.
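After a new face has been generated, it still has to be composited back into the original frame. A common, simple way to do this is alpha blending with a soft mask, where each output pixel is `mask * generated + (1 - mask) * original`. A mask that fades from 1 to 0 toward its boundary helps hide the seam. The sketch below assumes grayscale frames stored as equal-sized lists of rows and uses a rectangular feathered mask as a stand-in for the face-shaped masks real tools derive from landmarks or segmentation; the function names are hypothetical.

```python
def feathered_mask(height, width, border):
    """Soft mask: 1.0 in the interior, ramping linearly to 0.0 at the frame edge
    over `border` pixels (a stand-in for a real face-shaped mask)."""
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            edge_dist = min(y, x, height - 1 - y, width - 1 - x)
            row.append(min(1.0, edge_dist / border))
        mask.append(row)
    return mask


def alpha_blend(original, generated, mask):
    """Per-pixel composite: mask * generated + (1 - mask) * original."""
    return [
        [m * g + (1 - m) * o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]
```

Where the mask is 1 the generated face fully replaces the original, where it is 0 the original frame shows through, and in between the two are mixed. Poorly tuned masks are one source of the edge artifacts people look for when spotting fakes.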

The use of Artificial Intelligence (AI) for creating deepfake videos has become increasingly popular in recent times. These fabricated videos can be created with great accuracy and sophistication, which has raised concerns about the potential misuse of this technology.

As a result, several questions have arisen about how these deepfake videos work and what they mean for society. In this article, we'll answer some frequently asked questions about AI-powered deepfakes.

1. What is Deepfake Technology?

Deepfake technology refers to the use of artificial intelligence algorithms to create manipulated or altered visual and audio content that appears to be real but is actually fake.

3. Why Is It Considered Dangerous?

Deepfakes can easily mislead people because high-quality fakes are hard to distinguish from genuine videos. They can also make phishing attacks more sophisticated, for example by pairing fraudulent propaganda material with current news events.

4. How Can You Spot a Deepfake?

While a well-crafted deepfake is becoming increasingly difficult to spot at first glance, certain characteristics are worth treating as red flags: unusual blinking patterns in the people shown, the use of heavy filters such as Snapchat masks, and other traits indicating that the footage has been edited.
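The unusual blinking patterns mentioned above can be measured with the eye aspect ratio (EAR) from Soukupová and Čech's 2016 blink-detection work: the ratio of the eye's vertical openings to its width drops sharply while the eye is closed. The sketch below assumes you already have six (x, y) landmarks per eye for every frame from some facial-landmark detector (not shown). The threshold, the minimum run length, and the function names are assumptions to tune, not standard values. A strange blink rate is only a hint, since newer deepfakes can blink naturally.

```python
import math


def eye_aspect_ratio(eye):
    """EAR from six (x, y) eye landmarks p1..p6, where p1/p4 are the eye
    corners and p2, p3 (upper lid) sit opposite p6, p5 (lower lid)."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below
    `threshold` (both values are assumptions to tune per video)."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the last frame
        blinks += 1
    return blinks


def blinks_per_minute(ear_series, fps, **kwargs):
    """Blink rate over the whole clip, given its frame rate."""
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, **kwargs) / minutes
```

Requiring several consecutive low-EAR frames filters out single-frame landmark jitter that would otherwise be counted as a blink.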

5. What Are the Implications of Using Deepfakes in Society?

Fraudulently fabricated impersonations make it easier for cybercriminals to dupe targets into transferring resources (time, money, and so on), sharing sensitive or confidential information, or accepting a completely faked identity as genuine, none of which should be tolerated. Beyond that, people could lose privacy through invasive surveillance, while governments may impose harsher controls over the communication channels people otherwise rely on.

In summary, deepfakes are one example of a rapidly evolving technology with real potential for misuse, particularly in the hands of cybercriminals. Detecting and deterring such malicious activity is an essential component of broader strategies to protect society from nefarious actors, and keeping up with these trends helps stakeholders across all industries understand current developments.

As exciting as deepfake technology may sound, there is growing concern about its ethical implications. It's more important than ever for society to discuss how best to regulate these emerging technologies. Deepfakes are controversial for two main reasons: first and foremost, their potential misuse by malicious actors for propaganda or fake news, and second, the lack of transparency in how deepfakes are created.

Deepfakes raise many important questions about privacy and data security. Using someone's personal information without consent can violate their individual rights. The issue also extends to the legal system, where deepfake evidence could be accepted in court without being properly authenticated.

While some argue that regulation is necessary to prevent harm from deepfake misinformation campaigns, others point out that restricting these innovations could hinder research aimed at benefiting society, such as better visual recognition or other applications of facial recognition algorithms, including health-related detection.

Another point worth debating is accountability: who will take responsibility when deepfakes are misused? Should it fall on the creators who build robust models, or should governments implement stricter policies requiring thorough certification processes?

1. What are Deepfakes?

Deepfakes are synthetic media created with artificial intelligence techniques, in which a person's likeness or voice is replicated and implanted into another video or audio clip without their consent. They are made by combining and superimposing existing images onto source material, using machine learning algorithms that learn patterns from the data provided.

2. The level of sophistication

3. Upcoming ethical implications

There is already rising concern over how these technologies may be misused, especially after cases in which people were caught using fake videos as proof during professional hearings or legal proceedings. This poses an ethical dilemma for unsuspecting targets, who may not realize that the photos and videos of them already available online could be enough to fabricate their likeness.

4. Checks & Balances

In most jurisdictions, nonconsensual use isn't yet illegal unless malicious intent can be proven behind creating a deepfake without authorization. Companies are investing huge sums in artificial intelligence models that identify fraudulent content through verification mechanisms. Even so, security experts argue that the rigorous source checks traditionally practised when reviewing material should be applied equally to digital media, whatever state-of-the-art tools exist, alongside policies that govern usage and ensure safe deployment across society.

5. Required technological solutions

Deepfakes pose a potential threat in terms of privacy violations and cybercrime, which heightens concern over the rapid growth of this field, especially now that facial recognition is becoming more widespread. There is no clear solution in sight yet, despite researchers' ongoing efforts to provide comprehensive guidelines for ethical deployment that protect individual rights without restricting them unreasonably. That makes it all the more important to spread knowledge about the privacy issues surrounding artificial intelligence, and for everyone to learn how to identify fraudulent sources, so that we can mitigate the cascading harms as the world enters an era of ever-growing technological complexity.

AI deepfake technology is a rapidly evolving field that has taken the world by storm in recent years. It has been both lauded and vilified for its ability to create highly convincing digital replicas of real people, with applications ranging from entertainment to politics.

Furthermore, even when deepfakes are not used nefariously, manipulated content can find its way into legitimate organizations such as media outlets or governments. "Innocent" tampering can cast the truth into question without explanation or, worse, lead people to believe that false information is real.

Legal problems arise as well: traditional laws fall short when proof may have been manipulated by computer programs, most notably video evidence. Such manipulation undermines the validity of evidence and creates unprecedented, confusing scenarios for the judicial system.

In any event, it is incumbent on society as a whole, policymakers included, to evaluate carefully how we use this powerful innovation. The goal is to prevent misuse while keeping access open for responsible applications that benefit humanity, and to preserve ethics, dignity, privacy, and intellectual property rights, favoring nuance over dogma while recognizing the technology's promise.

Deepfake videos are not only used for entertainment: they can also spread misinformation, harm reputations, or even enable fraud. However, there are ways to protect ourselves against these forgeries.

Here are some tips on how to spot a fake video created by AI:

1) Check the source: Before trusting any piece of information you find online, especially if it's controversial, check whether it comes from a reputable source, such as a news website with a good track record for reliability or an official government site.

2) Examine the context: Always consider what was said before and after the key points in an edited clip. Does the clip fit what the speaker actually intended?

3) Pay attention to details: Scrutinize minor details such as gaps between teeth, hairlines, the edges around faces, and noise along those edges. These often show irregularities that don't appear in original footage.

4) Be wary of sensational content: If a video seems too outrageous or unbelievable, trust your instincts, because there's a good chance it isn't genuine.

5) Use available tools: Finally, use software tools that can detect the anomalies present in deepfake videos and images, which makes spotting threats easier.
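For the first tip, checking the source, one concrete check is possible when the original publisher releases a cryptographic checksum alongside a video. You can hash your copy and compare. The sketch below uses Python's standard `hashlib`. The function names are hypothetical, and the check has clear limits. It only proves your file is byte-identical to what the source hashed, and it says nothing about whether the source's own video is authentic. Any legitimately re-encoded copy, such as one re-uploaded to a social platform, will also fail to match.

```python
import hashlib


def sha256_of_file(path, chunk_size=65536):
    """Hex SHA-256 digest of a file, read in chunks so large videos fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_hash(path, published_hex):
    """True if the local file is byte-identical to the one the source hashed."""
    return sha256_of_file(path) == published_hex.strip().lower()
```

Normalizing the published value with `strip().lower()` avoids false mismatches caused by stray whitespace or uppercase hex digits copied from a web page.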

As faking videos with artificial intelligence becomes increasingly common, it's important that we stay vigilant. Just as some people are determined to exploit technology deceptively, the rest of us need to keep our understanding in step with technological change.

Table: the impact of deepfake technology by area.

| Area | Impact |
|---|---|
| Entertainment industry | Positive impact by enhancing visual effects in movies and advertisements. However, it also raises ethical concerns about consent and appropriateness. |
| Politics | Negative impact by spreading fake news and propaganda, damaging reputations and inciting unrest. |
| Cybersecurity | Negative impact by enabling fraud, identity theft and social engineering attacks through impersonation. |
| Law enforcement | Positive impact by improving facial recognition and criminal investigations. However, it also poses risks of false accusations and discrimination. |
| Education and research | Positive impact by enabling simulation and analysis of complex scenarios, such as medical diagnosis and climate change. However, it also requires ethical standards and data privacy protection. |
